5 Fitting the Model

With the data gathered, the next step is to estimate the model to get ability estimates for each team. Following the findings of the simulation study in Section 3, the game random intercept model will be used for the estimation.

5.1 Model estimation

The first step is to load in the data set and do some filtering using the dplyr package (Wickham & Francois, 2016).

library(dplyr)

load("_data/full_data.rda")To filter, I first remove any game that hasn’t been played (or was canceled) by removing any games that don’t have a score for either team. I also remove the competition field, as it is not necessary for the model estimation.

fit_data <- full_data %>%

filter(!is.na(h_goals), !is.na(a_goals)) %>%

select(-competition)Next, I filter the data to only include teams with a least 5 games played. This is to ensure that all teams included have a sufficient number of games to get reasonable estimates. This process is done iteratively to ensure that after removing teams with less than five games, teams that orginally had more than 5 games, but now no longer meet the criteria are also excluded.

filter_games <- TRUE

while(filter_games) {

team_counts <- table(c(fit_data$home, fit_data$away)) %>% as_data_frame() %>%

arrange(desc(n)) %>%

filter(n >= 5) %>%

select(team = Var1, games = n) %>%

arrange(team) %>%

mutate(code = seq_len(nrow(.)))

fit_data <- fit_data %>%

left_join(select(team_counts, -games), by = c("home" = "team")) %>%

rename(home_code = code) %>%

left_join(select(team_counts, -games), by = c("away" = "team")) %>%

rename(away_code = code) %>%

filter(!is.na(home_code), !is.na(away_code))

new_min <- table(c(fit_data$home, fit_data$away)) %>% as.numeric() %>% min()

if (new_min >= 5) {

filter_games <- FALSE

} else {

fit_data <- fit_data %>%

select(-home_code, -away_code)

}

}This filtering process leaves a total of 7811 games between 450 teams. The number of games for each team ranges from 5 to 62.

To estimate the model, the data has to be supplied to Stan in a list format. The names of the elements of the list should match the names of the input data specified in the Stan model (see Section 2.2.1 for Stan definition of the game random intercept model).

stan_data <- list(

num_clubs = nrow(team_counts),

num_games = nrow(fit_data),

home = fit_data$home_code,

away = fit_data$away_code,

h_goals = fit_data$h_goals,

a_goals = fit_data$a_goals,

homeg = fit_data$home_game

)In order to later assess model fit with posterior predictive model checks, a generated quantities section was added to the Stan model specification.

generated quantities {

int home_rep[num_games];

int away_rep[num_games];

for (g in 1:num_games) {

home_rep[g] = poisson_rng(exp(mu + (eta * homeg[g]) + alpha[home[g]] +

delta[away[g]] + gamma[g]));

away_rep[g] = poisson_rng(exp(mu + alpha[away[g]] + delta[home[g]] +

gamma[g]));

}

}This section attempt to replicated the original data. At each each iteration of the chain, the \(\lambda\) for each score within each game is calculated using the current values of the parameters. A random Poisson is then drawn for each team using the calculated \(\lambda\) values. Thus, the model is able to produce a posterior distribution for the scores of each game. These distribution can then be compared to the observed scores to see how well the model fits the data.

Finally, the model can be estimated using the Stan interface rstan (Guo et al., 2017). The model is estimated with 3 chains, each with 4000 iterations. The first 2000 iterations from each chain are discarded as burn in. Additionally, a thinning interval of 2 was used. These decisions were made to decrease computation time, and to limit the size of the result stanfit objects, which become quite large. This leaves 1000 iterations from each chain, for a total of 3000 iterations that will make up the final posterior distributions. As in the simulation study, the target proposal acceptance rate during the adaptation period was set to 0.99 (see Section 3.2; Stan Development Team, 2016c, 2016a).

library(rstan)

gri_stanfit <- stan(file = "_data/stan-models/gri_ppmc.stan", data = stan_data,

chains = 3, iter = 7000, warmup = 2000, init = "random", thin = 5,

cores = 3, algorithm = "NUTS", seed = 71715,

control = list(adapt_delta = 0.99, max_treedepth = 15))5.2 MCMC diagnostics

After estimating the model, before the parameters can be analyzed and inferences can be made, the model has to be checked to make sure it estimated correctly and completely. This diagnostic information is critical to Markov Chain Monte Carlo estimation, as with proper estimation, no valid inferences can be made. The code used to create the plot and tables used to display the diagnostic information can be seen in Appendix C.

5.2.1 Convergence

The first check is convergence. Convergence means that the MCMC chain found the high density area of the posterior distribution, and stayed there. When multiple chains are estimated, this can be done by verifying that the estimates from each chain end up in the same place. For a single chain, this means verifying that the chain is sampling roughly the same area at the beginning of the chain (after burn in) as in the end of the chain.

One way to assess convergence is through the \(\hat{R}\) statistic (Brooks & Gelman, 1997; A. Gelman et al., 2014). The \(\hat{R}\) statistic is also known as the potential scale reduction, and is a measure of how much variance there is between chains compared to the amount of variation within chains. Gelman and Rubin (1992) suggest that in order to conclude the model has successfully converged, all \(\hat{R}\) values should be less than 1.1.

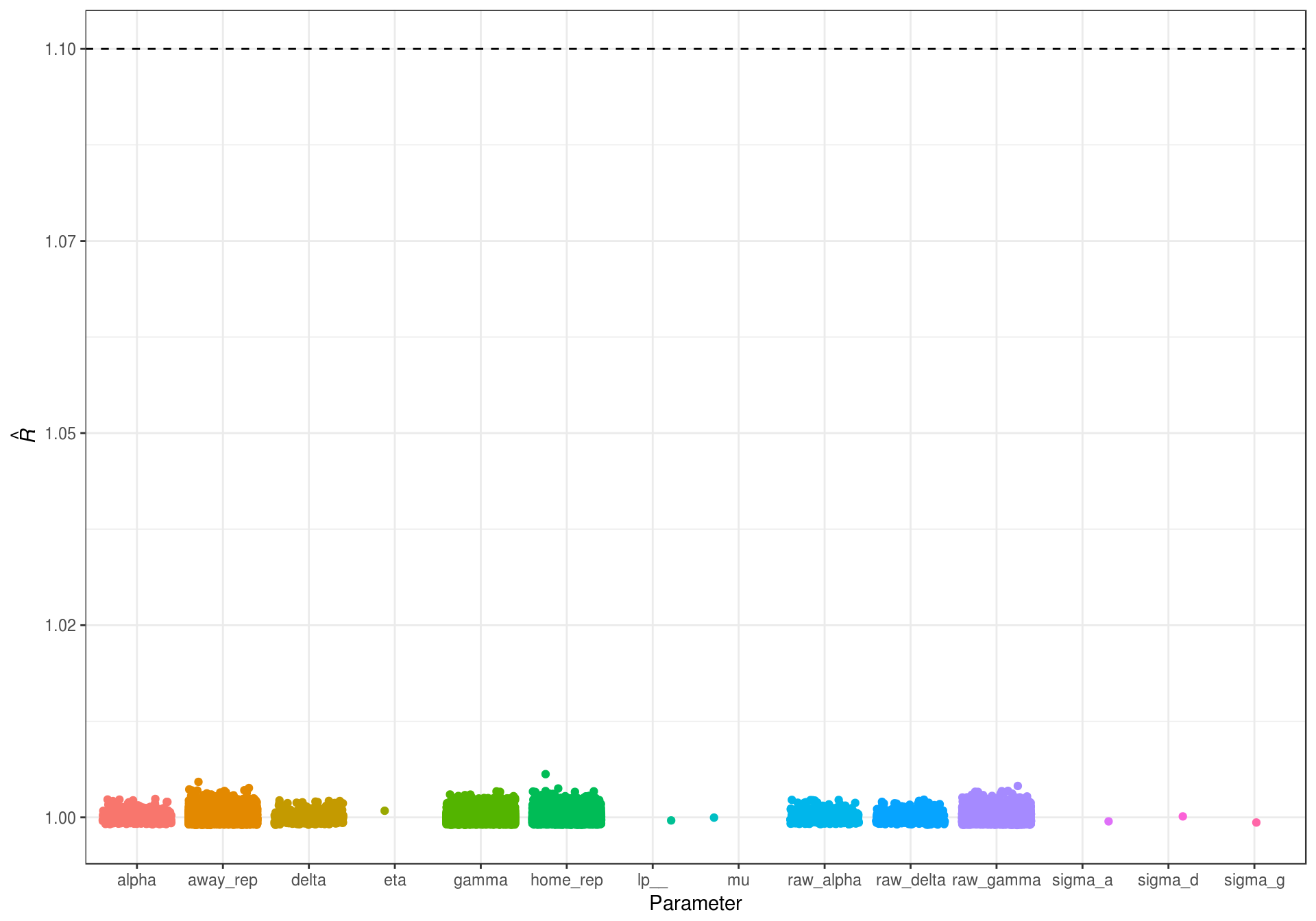

Figure 5.1: Rhat statistics for the estimated game random intercept model.

Figure 5.1 shows that all of the \(\hat{R}\) values are below the suggested cutoff of 1.1, indicating that the model has converged.

5.2.2 Efficiency

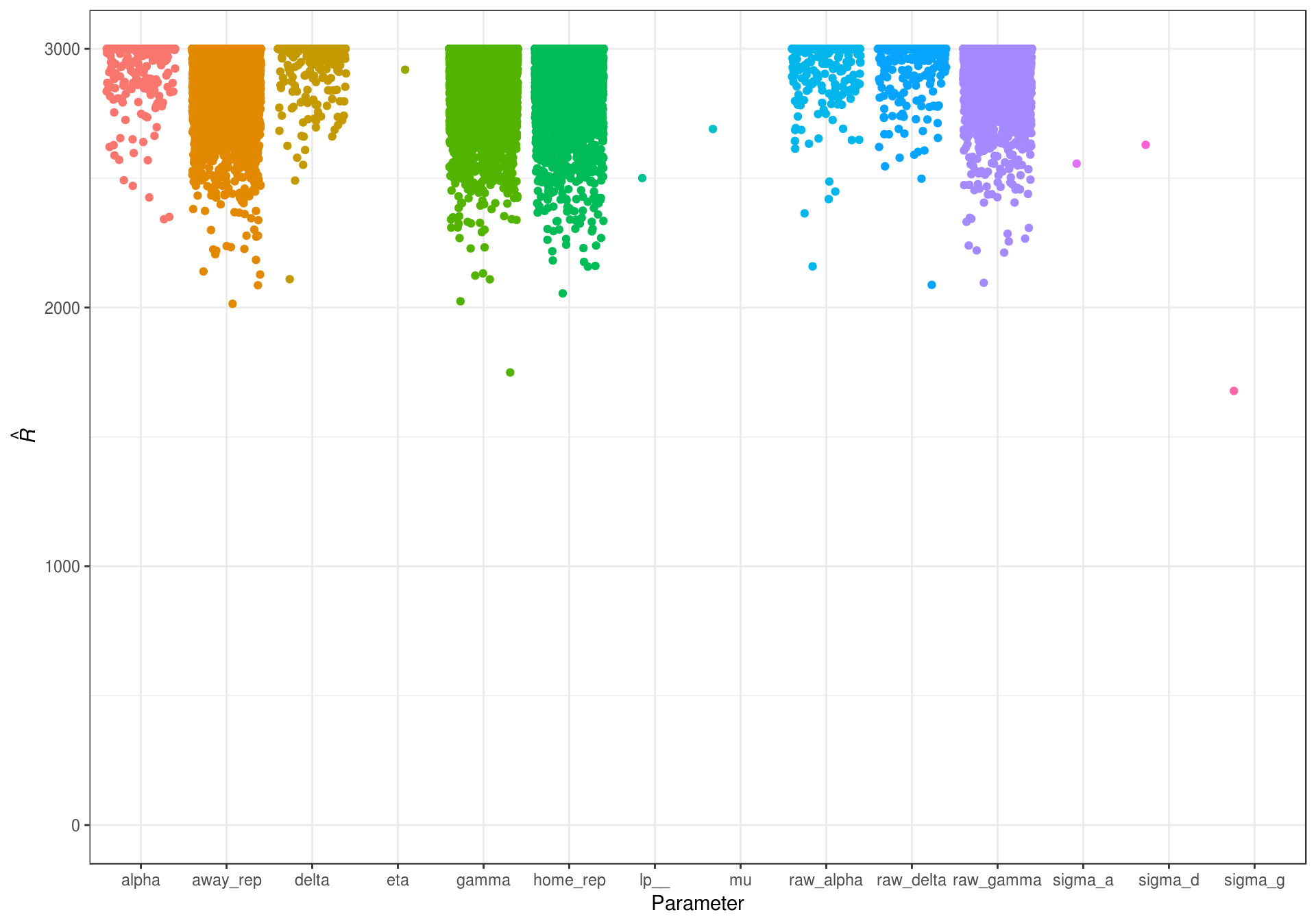

The second important check for MCMC estimation is the efficiency of the sampler. In other words, it is important to check that the algorithm adequately sampled the full posterior. There are several ways this can be examined. The first is by examing the effective sample size. This diagnostic takes into account the autocorrelation in the chain to determine the ‘effective’ number of independent draws from the posterior. If the chain is slow moving, the draws will be highly autocorrelated, and effective sample size will be well below the total number of iterations in the chain. However, low autocorrelations would indicate that the sampler is moving around the posterior fairly quickly, and the effictive sample size will be at or near the true sample size of the posterior. The effective sample size for all parameters can been seen in Figure 5.2.

Figure 5.2: Effective sample size for the estimated game random intercept model parameters.

There are also measure of efficiency that are exclusive the No U-Turn Sampler (NUTS; Hoffman & Gelman, 2014). For example the Bayesian Factor of Missing Information (BFMI) gives an estimate of how well the sampler adpated and explored the posterior distribution. The BFMI ranges from 0 to 1, with 0 and 1 representing poor and excellent estimation respectively. This is calculated for the chain overall (Betancourt, 2016).

| Chain | BFMI | Mean Acceptance Rate | Max Treedepth |

|---|---|---|---|

| Chain 1 | 0.847 | 0.986 | 7 |

| Chain 2 | 0.902 | 0.989 | 7 |

| Chain 3 | 0.922 | 0.983 | 6 |

The BFMI values in Table 5.1 indicate that the sampler was able to adequately visit the posterior distributions. Additionally, Table 5.1 shows the mean acceptance rate for each chain. As expected, these values are very close to the 0.99 that was specified when the model was estimated (control = list(adapt_delta = 0.99); Section 5.1). As noted in Sections 3.2 and 5.1, a target acceptance rate this high is needed to prevent divergent transitions. This occurs due to the small variances of \(\alpha\), \(\delta\), and \(\gamma\) that are estimated. The high target acceptance rate forces the sampler to take smaller steps, keeping the variances within reasonable ranges.

The concern with setting the target acceptance this high is that for parameters with wider posteriors, the sampler will not be able to move fast enough. In the NUTS algorithm, at each iteration, the sampler looks for a place to “U-Turn” in a series of possible branches. If the sampler is terminating before the maximum possible tree depth (set to 15; see Section 5.1), then the algorithm is able to adequately find good values for the next iteration of the chain. Bumping up against the maximum allowed tree depth, or going beyond it, indicates that stepsize is too small (Stan Development Team, 2016a, 2016b). Because the Max Treedepth values in Table 5.1 are all below the maximum specified, and the BFMI values are close to 1, there is strong evidence that the sampler was indeed able to adequately sample the posteriors.

5.3 Model fit

To assess model fit, I will use posterior predictive model checks. Posterior predictive checks involve simulating replications of the data using the values of the Markov chain, and then comparing the replicated data to the observed data (A. Gelman et al., 2014). This means that replicated data sets take into account the uncertainty in the parameter estimates, as a new replicated data set is created at each iteration of the Markov chain. These data sets can then be used to look for systematic differences in the characterics of the observed and simulated data, often through visualizations (A. Gelman & Hill, 2006).

The first step is to extract the replicated data sets from the stanfit object using the purrr (Wickham, 2016b) and tidyr (Wickham, 2017) packages.

library(purrr)

library(tidyr)

home_rep <- rstan::extract(gri_stanfit, pars = "home_rep",

permuted = TRUE)$home_rep

away_rep <- rstan::extract(gri_stanfit, pars = "away_rep",

permuted = TRUE)$away_rep

home_rep <- t(home_rep) %>%

as_data_frame() %>% as.list()

away_rep <- t(away_rep) %>%

as_data_frame() %>% as.list()

counter <- seq_along(home_rep)

rep_data <- pmap_df(.l = list(h = home_rep, a = away_rep, c = counter),

.f = function(h, a, c) {

data_frame(

replication = c,

game = seq_len(length(h)),

home_score = h,

away_score = a

)

})5.3.1 Score distributions

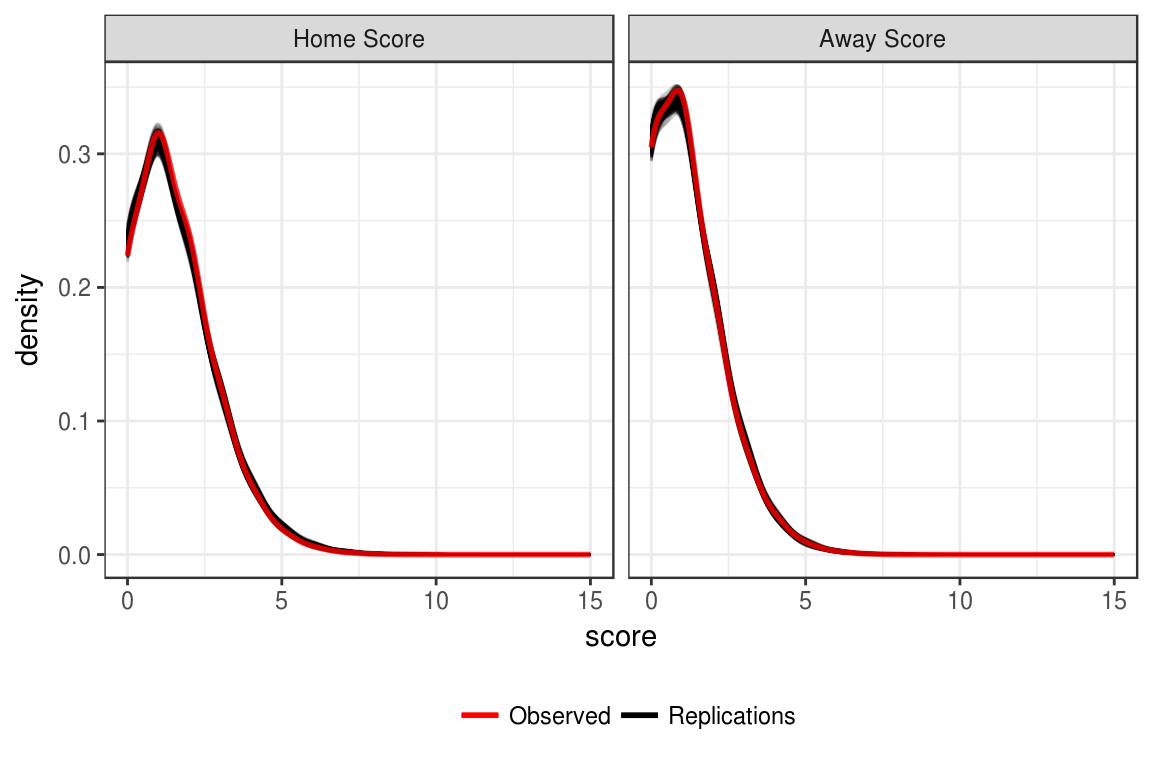

The first posterior predictive check to be examined is the distribution of home and away scores. For each replicated data set there is a distribution of goals scored by the home and away teams. To compare to the observed data, we can plot each of these distributions, and then overlay the distribution from the observed data using ggplot2 (Wickham & Chang, 2016). The code for Figure 5.3 can be see in Appendix C.4.

Figure 5.3: Recovery of observed score distributions.

Figure 5.3 shows that the observed score distributions for both the home and away scores are very similar to what is seen in the replicated data sets. Thus, this provides evidence that the model is able to recover the observed distributions.

5.3.2 Margin of victory intervals

It is also possible to examine the margin of victory for each game. In each of the replicated data sets, the home team’s margin of victory for every game can be calculated as replicated home score minus the replicated away score. Doing this for every replication creates a posterior distribution for the home team’s margin of victory in every game. From the posterior we can create credible intervals (Appendix C.5) and determine how often the observed margin of victory falls outside the credible interval.

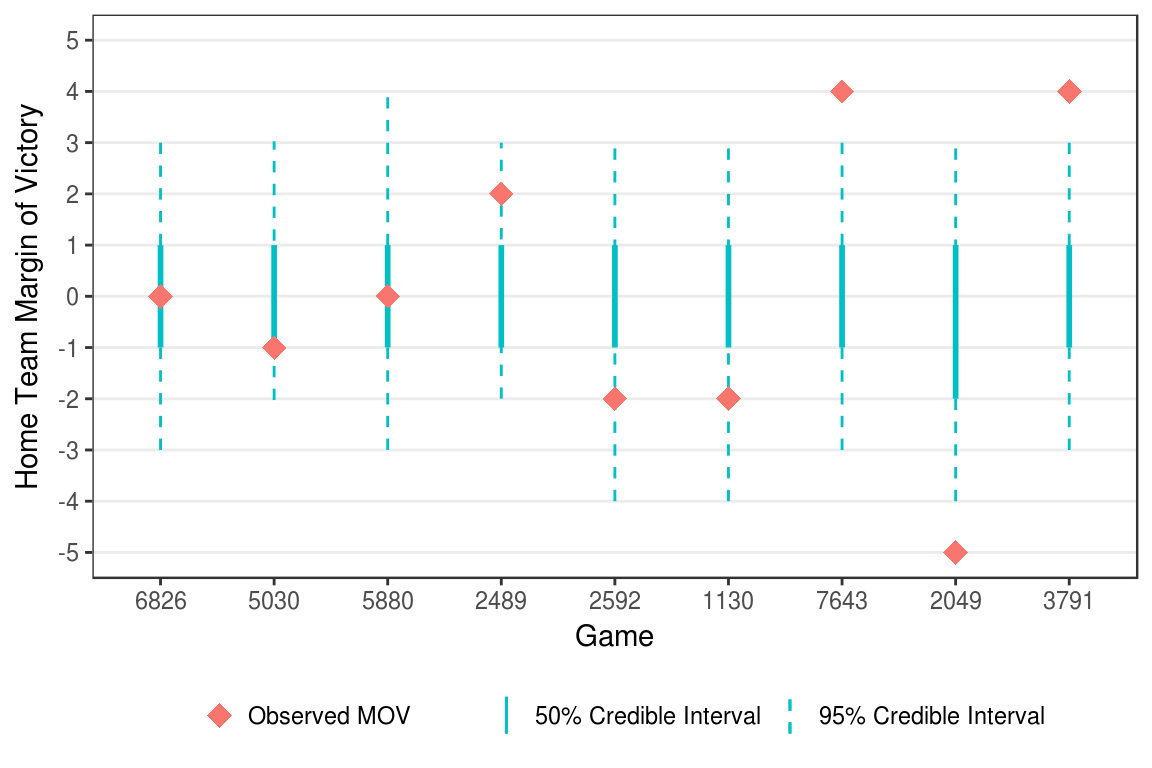

Figure 5.4: Example credible interval for game margin of victories.

Figure 5.4 shows examples of games where the observed margin of victory fell inside the 50% credible interval, inside the 95% credible interval, and outside both intervals. Overall, for the 7811 games included in the estimation, the observed margin of victory fell within the 50% credible interval 70.2 percent of the time and within the 95% credible 98.0 percent of the time.

5.3.3 Prediction error

Another posterior check that can be looked at is prediction accuracy. For each replication, whether the home team won, lost, or tied can be determined for each game. Across replications, it is then possible to look at the probability of the home teaming experiencing a given outcome in each game. The probabilities can then be compared to the observed outcome to determine how accurate the predictions were. The accuracy can be determined by using a binary loss function (was the most likely outcome the observed outcome), or a log loss function (how far from the observed outcome was the probability of that outcome).

The first step is to get the probability of the home team winning, tieing, and losing each game.

outcomes <- rep_data %>%

mutate(mov = home_score - away_score) %>%

group_by(game) %>%

summarize(

win_prob = length(which(mov > 0)) / n(),

tie_prob = length(which(mov == 0)) / n(),

loss_prob = length(which(mov < 0)) / n()

) %>%

mutate(

most_likely = ifelse(win_prob > tie_prob & win_prob > loss_prob, "win",

ifelse(tie_prob > win_prob & tie_prob > loss_prob, "tie", "loss"))

) %>%

mutate(

obs_mov = fit_data$h_goals - fit_data$a_goals,

outcome = ifelse(obs_mov > 0, "win", ifelse(obs_mov == 0, "tie", "loss"))

)Using the predictions from the replicated data sets, the model gave the observed outcome the highest probability in 53.7 percent of the games.

One problem with this approach is that it doesn’t take into the actual values of the probabilities. For example, take two games where the home team won. In the first game, the home team had a 60 percent chance of winning. In the second game, the home teams had a 90 percent chance of winning. In both cases, the most likely outcome matches the observed outcome, so both instances are assigned a one in the binary loss function as a correct prediction. Alternatively, we could use the log loss function to look at how far the probability was from the observed event (Altun, Johnson, & Hofmann, 2003). In this example, the second game would be a better prediction, and have a lower log loss, because a probability of 0.9 is closer to the observed outcome (1) than a probability of 0.6.

The log loss for multiple games is defined in equation (5.1).

\[\begin{equation} logloss = - \frac{1}{N}\sum_{i=1}^N\sum_{j=1}^My_{ij}\log(p_{ij}) \tag{5.1} \end{equation}\]In equation (5.1), \(N\) is number of observations (in this case the number of games), \(M\) is the number of outcomes (for soccer games this is 3: win, loss, and tie), \(y_{ij}\) is a binary indicator of whether outcome \(M\) occured for observation \(N\) (1) or not (0), and \(p_{ij}\) is the probability of outcome \(M\) for observation \(N\). Thus, the log loss for a set of predictions is the log of the probability of the observed outcomes, summed over all observations. In the case of perfect predictions, the probability of the observed outcome would be 1, and the log probability would be 0. This means that the closer the log loss is to 0, the better the predictions are (Roy & McCallum, 2001).

To calculate the log loss for the estimated game random intercept model, I define a function that takes in a matrix of predictions and a matrix of outcomes.

logloss <- function(pred, obs){

eps <- 1e-15

pred <- pmin(pmax(pred, eps), 1 - eps)

(-1 / nrow(obs)) * sum(obs * log(pred) + (1 - obs) * log(1 - pred))

}Then, matrices of predictions and outcomes can be created, and the log loss can be calculated.

predictions <- outcomes %>%

select(win_prob, tie_prob, loss_prob) %>%

as.matrix()

observations <- outcomes %>%

mutate(

obs_win = ifelse(outcome == "win", 1, 0),

obs_tie = ifelse(outcome == "tie", 1, 0),

obs_loss = ifelse(outcome == "loss", 1, 0)

) %>%

select(obs_win, obs_tie, obs_loss) %>%

as.matrix()

avg_logloss <- logloss(pred = predictions, obs = observations)The log loss for predictions from the replicated data sets is 1.716. Converting back to a probability scale, on average, the probability of the observed outcome was off by 0.18. In isolation, the log loss can be hard to interpret. Instead, it is often useful to compare to baseline models.

| Model | Log Loss |

|---|---|

| Game Random Intercept | 1.72 |

| Data Average | 1.86 |

| Equal Probabilities | 1.91 |

| Home Win | 37.70 |

Table 5.2 shows the log loss for a variety of models. In the data average model, the probability of each outcome is set to the overall average for the entire data set. In the equal probabilities model, the probability for each outcome is set to 0.33. Finally, in the home win model, the probability of the home team winning is set to 1 and the probability of the other outcomes is set to 0. The posterior predictive probabilities from the game random intercept model out perform all of these baseline models.

5.3.4 Posterior predictive check summary

Overall, the posterior predictive model checks indicate adequate model fit. The model is able to accurately recover the distributions of scores for both the home and away teams (Figure 5.3). Additionally, when looking at individual games, the credible intervals for the margin of victory are able to consistently capture the observed margin of victory. Finally, the prediction error shows that the model’s predictions are able to more accurately pick game outcomes than a variety of baseline models.

Taken in totality, there is sufficient evidence of model fit for us to proceed with the analysis and examine the posterior distributions of the parameter estimates.

5.4 Results

A ranking of the teams can be created by the goals they would be expected to score and concede against an average team at a neutral location. The larger the difference between these expected goals, the better team. First we pull out the parameters we need from the model.

params <- rstan::extract(gri_stanfit, pars = c("mu", "alpha", "delta"))

alpha <- colMeans(params$alpha)

delta <- colMeans(params$delta)

mu <- mean(params$mu)Then we can compute the expected offense, defense, and margin for each team to create the rankings.

rankings <- data_frame(

Club = team_counts$team,

Attacking = alpha,

Defense = delta

) %>%

mutate(

`Expected Offense` = exp(mu + alpha),

`Expected Defense` = exp(mu + delta),

`Expected Margin` = `Expected Offense` - `Expected Defense`

) %>%

arrange(desc(`Expected Margin`)) %>%

mutate(

Attacking = formatC(Attacking, digits = 3, drop0trailing = FALSE,

format = "f"),

Defense = formatC(Defense, digits = 3, drop0trailing = FALSE,

format = "f"),

`Expected Offense` = formatC(`Expected Offense`, digits = 2,

drop0trailing = FALSE, format = "f"),

`Expected Defense` = formatC(`Expected Defense`, digits = 2,

drop0trailing = FALSE, format = "f"),

`Expected Margin` = formatC(`Expected Margin`, digits = 2,

drop0trailing = FALSE, format = "f")

)As we might expect, the top of the list is dominated by team leading the best European leagues and having success in the Champions League. Barcelona comes in as the top team in rankings, followed by Bayern Munich, Paris Saint-Germain, Real Madrid, and AS Monaco to round out the top 5. The top offense belongs to Barcelona according to the model, while FC Copenhagen boasts the best defense.

DT::datatable(select(rankings, -Club), rownames = rankings$Club,

options = list(pageLength = 10, scrollX = TRUE,

columnDefs = list(list(className = 'dt-center', targets = 1:5))),

caption = "Club rankings from game random intercept model")